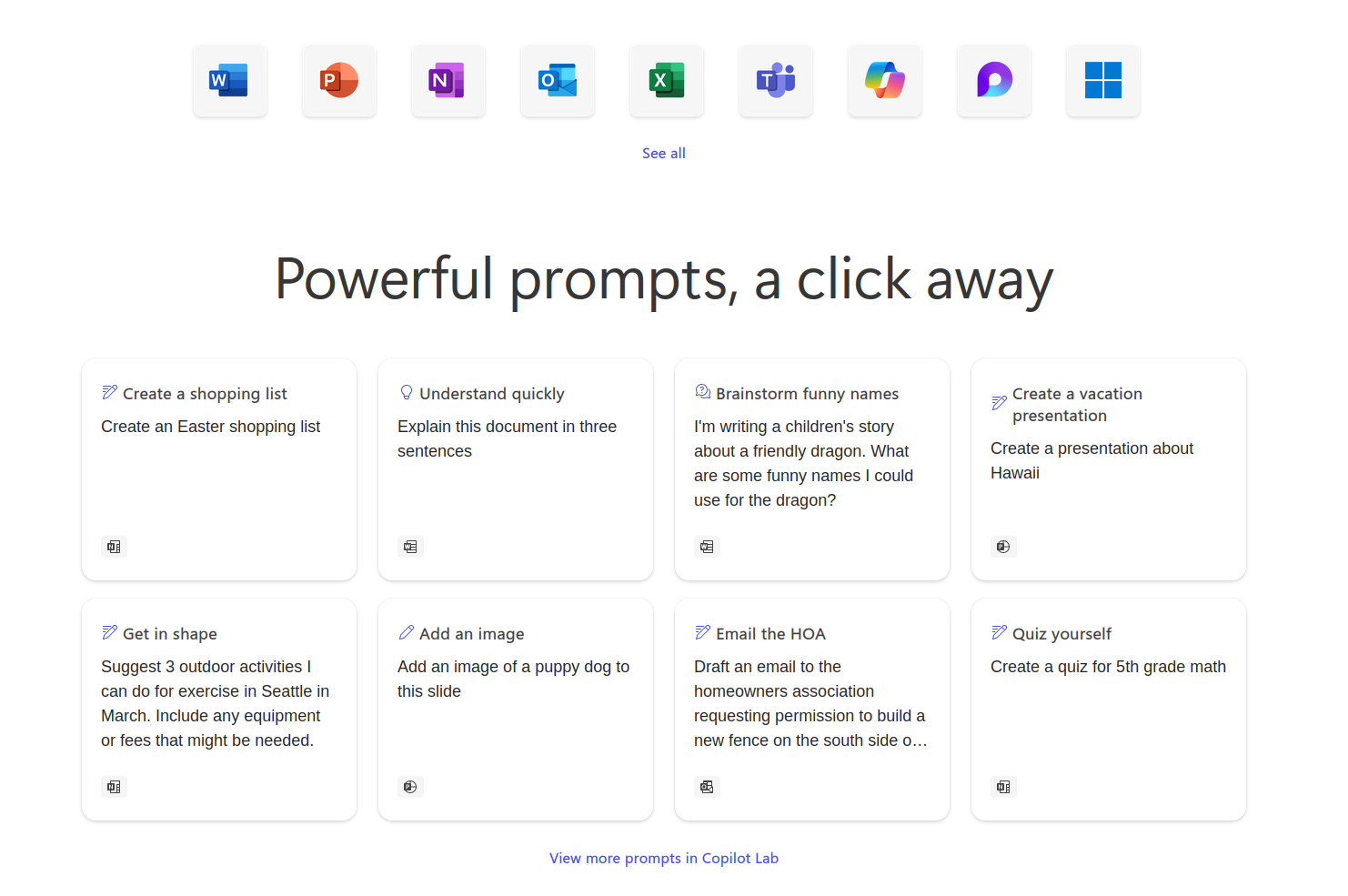

According to Intel executives, Microsoft is planning to run parts of Copilot locally on PCs. The challenge is that much of today's PC hardware lacks the computing power needed to support this.

Currently, Copilot relies heavily on cloud computing. Moving some of the processing onto the local machine is expected to reduce latency and improve performance and privacy, because that data would no longer need to be sent to the cloud. Intel executives shared these details at the company's AI Summit in Taipei, as reported by Tom's Hardware.

However, hardware limitations remain a concern. The aim is to ensure that there is enough computing power to run parts of Copilot locally without affecting device speed or battery life. Intel executives suggest that using a neural processing unit (NPU) rather than a GPU can help avoid draining the battery.

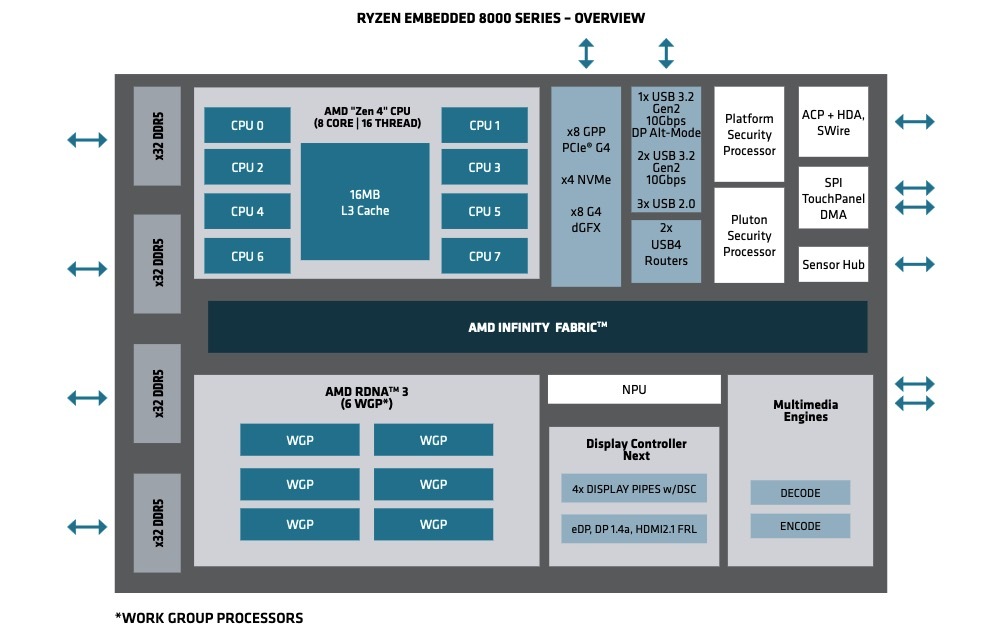

Microsoft is reportedly adamant about the NPU requirement. TrendForce previously reported that AI PCs integrated with Copilot should have NPUs capable of 40 tera operations per second (TOPS). Intel has now confirmed this requirement, but few NPUs currently meet it. Intel notes that its Core Ultra chips currently offer 10 to 15 TOPS, while AMD offers NPUs with up to 16 TOPS. The Qualcomm Snapdragon X Elite, at 45 TOPS, is reportedly the only commonly used processor for Windows that meets the criteria. Intel anticipates that the next generation of Core Ultra chips will also fulfill the requirement.
